Imagine uploading an X-ray and getting back not just a grainy image but a detailed, plain-language report of what might be wrong. At Google I/O 2025, that vision took a real step forward with the launch of MedGemma, a suite of open AI models built for understanding medical text and images. This isn't just another tech gadget: it's a tool that could make healthcare faster, smarter, and more accessible. But does it really mean doctors are becoming obsolete? Let's look at what MedGemma is, how it works, and why it's sparking both excitement and debate.

At its core, MedGemma is a set of AI models built on Google's Gemma 3 architecture and adapted specifically for healthcare. Think of it as a very capable assistant that can "read" medical images such as X-rays, CT scans, or dermatology photos and combine them with textual data, like patient records, to provide insights. At launch it came in two flavors: MedGemma 4B, a 4-billion-parameter model that handles both images and text, and MedGemma 27B, a larger text-only model optimized for clinical reasoning. The 4B model is designed, for instance, to look at a chest X-ray and flag potential issues like pneumonia or fractures, while the 27B model can work through complex medical texts to support diagnosis or patient triage.
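To get a feel for what "4 billion" versus "27 billion" parameters means in practice, here is a back-of-the-envelope sketch of how much memory the model weights alone occupy at different numeric precisions. It assumes the nominal parameter counts from the model names (the exact counts differ slightly) and ignores activations and other runtime overhead, so real requirements are higher.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Nominal sizes taken from the model names; actual parameter counts vary slightly.
    for name, params in [("MedGemma 4B", 4e9), ("MedGemma 27B", 27e9)]:
        for dtype in ("bfloat16", "int4"):
            print(f"{name} @ {dtype}: ~{weight_memory_gb(params, dtype):.1f} GB")
```

At bfloat16 this gives roughly 8 GB for the 4B model and 54 GB for the 27B model, which is why the smaller model fits on a single consumer-class GPU while the larger one calls for a data-center card or a quantized (for example, 4-bit) version.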

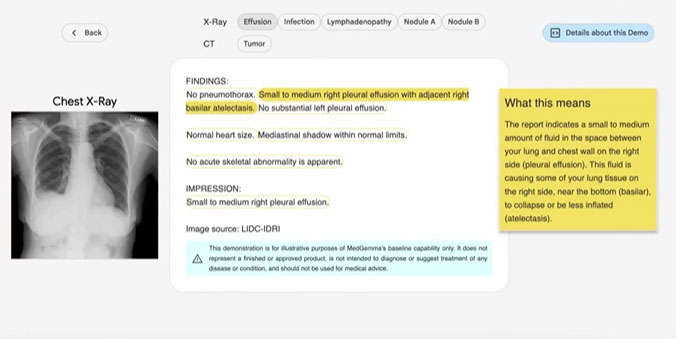

What makes MedGemma exciting is its ability to simplify the complex. Radiologists, for example, can spend hours analyzing images and cross-referencing them with patient histories. MedGemma could produce a preliminary assessment in seconds, highlighting areas of concern for a doctor to review. In a demo shared online, users upload an X-ray and MedGemma generates a clear, jargon-free explanation of what it sees: think "possible fracture in the left rib" instead of a dense medical report. That kind of clarity could help patients better understand their health and make informed decisions.

The technical foundation is solid. The 4B model uses a SigLIP image encoder pre-trained on de-identified medical data, including chest X-rays, dermatology images, and histopathology slides. This training helps it pick up patterns that a tired human eye might miss, such as subtle signs of early-stage lung disease. Some studies suggest AI models like this can reduce diagnostic errors by as much as 20% in certain settings, catching issues clinicians might overlook due to fatigue or oversight, though results vary widely by task. Meanwhile, the 27B model is fine-tuned to process clinical notes, making it useful for summarizing medical records or suggesting treatment options drawn from the medical literature it was trained on.

But let's address the elephant in the room: the claim, circulating online, that "most doctors are useless" now. That's a bold statement, and it's worth unpacking. MedGemma is designed to assist healthcare professionals, not replace them. It's like a supercharged calculator for doctors: great for crunching data and spotting patterns, but lacking the human touch, intuition, and ethical judgment that doctors bring. For instance, while MedGemma can flag a potential tumor on an X-ray, it's the doctor who sits with the patient, explains the diagnosis, and helps them carry the emotional weight of the news. AI can't replicate that, nor can it weigh the nuances of a patient's medical history and lifestyle that often shape treatment plans.

MedGemma's openness is another reason it's turning heads: the model weights are freely downloadable. Developers can get them through platforms like Hugging Face and fine-tune them for specific tasks, such as analyzing rare diseases or building apps for remote clinics. This accessibility could be a game-changer in underserved areas, where radiologists are scarce and patients often wait weeks for results. In places like rural India or sub-Saharan Africa, where healthcare infrastructure is stretched thin, a model running on a single GPU could bring near-specialist diagnostic support to local doctors, making high-quality care more equitable.
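For developers curious what "access through Hugging Face" looks like, here is a minimal sketch using the `transformers` library's `image-text-to-text` pipeline. It assumes the instruction-tuned 4B model is published as `google/medgemma-4b-it` (access is gated behind accepting Google's terms), that a recent `transformers`, `torch`, and Pillow are installed, and that a CUDA GPU is available. The system prompt and the file name in the usage line are illustrative choices, not part of any official recipe.

```python
from typing import Any

# Assumed Hugging Face model ID for the instruction-tuned 4B variant.
MODEL_ID = "google/medgemma-4b-it"


def build_messages(image: Any, question: str) -> list[dict]:
    """Build a chat-style prompt that pairs one image with a text question."""
    return [
        {
            "role": "system",
            # Illustrative system prompt; adjust for the task at hand.
            "content": [{"type": "text", "text": "You are an expert radiologist."}],
        },
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image", "image": image},
            ],
        },
    ]


def describe_xray(image_path: str, question: str = "Describe this X-ray.") -> str:
    """Run the model on one image. Requires a GPU, torch, Pillow and recent transformers."""
    # Heavy imports are deferred so the prompt builder works without them installed.
    import torch
    from PIL import Image
    from transformers import pipeline

    pipe = pipeline(
        "image-text-to-text",
        model=MODEL_ID,
        torch_dtype=torch.bfloat16,
        device="cuda",
    )
    image = Image.open(image_path)
    output = pipe(text=build_messages(image, question), max_new_tokens=200)
    # The pipeline returns the full conversation; the last turn is the model's reply.
    return output[0]["generated_text"][-1]["content"]
```

A call such as `describe_xray("chest_xray.png")` (a hypothetical file) would return a free-text draft. Like any MedGemma output, it is a starting point for a clinician's review, not a diagnosis.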

Of course, it's not all rosy. AI in healthcare raises thorny questions about data privacy, bias, and reliability. MedGemma's models were trained on de-identified data, but keeping patient information secure in real-world applications is critical. There's also the risk of over-reliance: doctors might lean too heavily on AI and miss issues the model was never trained to catch. And while Google reports strong accuracy results, MedGemma is still a developer tool that requires validation for each specific use case. In other words, it's not a plug-and-play miracle cure.

The buzz around MedGemma isn't just hype; it's backed by real potential. Posts on X from healthcare enthusiasts and developers highlight its versatility, with one user calling it "a great step forward in making medical knowledge and diagnostics accessible to everyone." Another pointed to its efficiency, noting that it can run on a single GPU, so even small clinics could adopt it. But the excitement comes with a healthy dose of skepticism, as some worry about the gap between tech promise and real-world impact. After all, healthcare isn't just about algorithms. It's about trust, empathy, and human connection.

MedGemma's release marks a pivotal moment in medical AI, offering tools that could make diagnostics faster, more accurate, and more accessible. It's not here to kick doctors to the curb but to give them a powerful ally in the fight against disease. As this technology evolves, it's up to all of us (patients, doctors, and developers) to ensure it's used wisely, balancing innovation with the human heart of medicine.